DFS APIs

The DFS APIs contain methods for reading, modifying and creating DFS files.

The DFS API is available in two forms: a C API and a .NET/C# API.

- The DFS C API is a set of exported C methods, available in C/C++ or other native environments.

- The DFS .NET/C# API is a set of .NET classes and interfaces available in any .NET compatible environment.

How to get it

Check out the How to get it section.

On top of the two DFS APIs, a number of toolboxes are available; see Derivations.

The DHI.DFS NuGet package contains all components required to build and run applications for reading and writing DFS files on Windows. It contains a mix of .NET assemblies and native libraries, and also includes header files, lib files and build targets for C/C++.

DFS C API

Note

The DFS C API is available from version 19.0.0 (MIKE Release 2021).

The DFS C API is a set of exported C methods for reading, modifying and creating DFS files. The methods are exported from ufs.dll.

The ufs.dll and its dependent libraries are available as a NuGet package at www.nuget.org/packages/DHI.DFS/.

The NuGet package can be used directly in a C/C++ project in Visual Studio, as the DHI.MikeCore.CExamples example below does.

Class Library Documentation for C API

Detailed API documentation on each method is under construction (February 2021), and is currently provided on request.

Examples C/C++

Examples of its usage can be found in the GitHub repository:

specifically at:

DFS .NET/C# API

The DFS .NET API is a set of interfaces and classes for reading, modifying and creating DFS files from a .NET compatible environment.

The API is available through the namespace:

DHI.Generic.MikeZero.DFS

Note

The DFS .NET API does not currently support retrieving local level statistics.

Class Library Documentation for .NET/C#

The class library documentation provides details of every interface, class and method, and exists in the form of:

- Assembly documentation XML file: DHI.Generic.MikeZero.DFS.xml

The assembly documentation file is located together with the assembly in the DHI.DFS NuGet package. The XML file is used by IDEs like Visual Studio, making the documentation available within the IDE. The documentation is also available in the Visual Studio Object Browser when DHI.Generic.MikeZero.DFS.dll is loaded.

Examples

Examples are provided on GitHub; see GitHub examples.

The repository contains examples in C#, CS-script (C# scripting engine) and IronPython. Examples for Matlab are provided with the DHI Matlab Toolbox.

DFS API structure

The API is divided into two levels, each giving different access to the DFS functionality: the generic API and the specialised APIs.

The generic API allows for reading/modifying/creating DFS files with all the freedoms and restrictions that DFS imposes.

Each specialised API works with one type of DFS file and restricts the use of DFS to what that file type uses. For example, the API for dfs2 files assumes that all items use the same 2D spatial axis, and gives access to the spatial axis only at file level, not at item level.

Use the specialised APIs whenever possible. The generic API should only be used when the file at hand cannot be handled by one of the specialised APIs.

The following sections give a short overview of the generic API, while Section 4 describes each specialised file type and its API.

Opening a DFS file – DfsFileFactory

The DfsFileFactory provides methods for opening existing DFS files. It currently supports the following file types:

- dfs1
- dfs2
- dfs3
- dfsu (not all types)
- generic dfs

If your DFS file type is not listed as one of the supported specialised types in the list above, you can always open it using the generic DFS functionality.

Each file can be opened in read mode, edit mode or append mode.

In read mode the data in the file cannot be updated. The file is opened for reading, and the file pointer is positioned at the first item-time step in the file.

In edit mode, the data in the file can be updated. The file pointer is positioned at the first item-time step, as in read mode.

In append mode the file is opened for editing, and the file pointer is positioned after the last item-time step in the file, so any data written to the file is appended.
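The three modes differ only in whether the file is writable and where the item-time step pointer starts. The Python sketch below models just that; it is not the DFS API (which is .NET/C), and the names `OpenMode` and `initial_state` are hypothetical. The comments map each mode to the DfsFileFactory method that opens a generic file in it.

```python
from enum import Enum


class OpenMode(Enum):
    """Hypothetical enum; the comments name the matching DfsFileFactory method."""
    READ = "read"      # DfsGenericOpen
    EDIT = "edit"      # DfsGenericOpenEdit
    APPEND = "append"  # DfsGenericOpenAppend


def initial_state(mode, num_timesteps):
    """Return (writable, index of the time step the file pointer starts at)."""
    writable = mode is not OpenMode.READ
    # Read and edit mode start at the first item-time step (time step 0);
    # append mode starts after the last time step, so written data is appended.
    start_step = num_timesteps if mode is OpenMode.APPEND else 0
    return writable, start_step
```

For a file with 24 existing time steps, `initial_state(OpenMode.APPEND, 24)` returns `(True, 24)`.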

The DfsFileFactory also provides support for reading and writing mesh files.

Generic DFS File – IDfsFile

The IDfsFile is the main entry point to a generic DFS file.

A file can be opened for reading, editing or appending by using one of the static DfsFileFactory methods DfsGenericOpen, DfsGenericOpenEdit or DfsGenericOpenAppend.

The IDfsFile provides functionality for reading and writing dynamic and static items by extending the IDfsFileIO and IDfsFileStaticIO interfaces. Furthermore, it provides access to the header (FileInfo) and a list of item info defining each of the dynamic items.

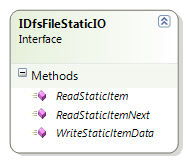

Accessing Static Items – IDfsFileStaticIO

The interface IDfsFileStaticIO provides functionality for reading and writing static items and their data.

The read functions return an IDfsStaticItem, which includes the specification of the static item and its data values. Use ReadStaticItemNext to iterate through all the static items; when no more static items are present, null is returned.
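The read-until-null loop described above can be sketched in Python. `StaticItemReader` and `read_all_static_items` are hypothetical names: the reader stands in for IDfsFileStaticIO and `None` plays the role of .NET `null`, so this shows the shape of the loop only, not the real API.

```python
class StaticItemReader:
    """Hypothetical stand-in for IDfsFileStaticIO (not the real API)."""

    def __init__(self, items):
        self._items = list(items)
        self._pos = 0

    def read_static_item_next(self):
        # Like ReadStaticItemNext: return the next static item, or None (null) when none are left.
        if self._pos >= len(self._items):
            return None
        item = self._items[self._pos]
        self._pos += 1
        return item


def read_all_static_items(reader):
    """Collect every static item by calling read_static_item_next until it returns None."""
    items = []
    item = reader.read_static_item_next()
    while item is not None:
        items.append(item)
        item = reader.read_static_item_next()
    return items
```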

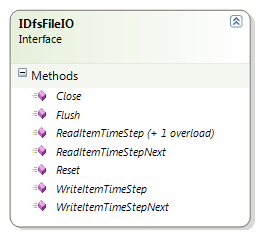

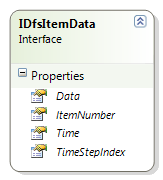

Accessing Dynamic Item Data – IDfsFileIO

The interface IDfsFileIO provides functionality for reading and writing dynamic item data. To get information on the dynamic items, use the IDfsFile.ItemInfo list.

The read functions return an IDfsItemData interface, which contains the item number, the time of the time step and the data values.

Depending on the DfsSimpleType of the dynamic item being read, the IDfsItemData can be cast to its generic type: if the dynamic item stores float values, it can be cast to IDfsItemData<float>. Alternatively, the data from IDfsItemData.Data can be cast to the native array, for example float[].

The ‘next’ versions of the read and write functions read/write data for the next dynamic item-time step. If a ‘next’ function is called as the very first read/write function, it accesses the first time step of the first item. Subsequent calls cycle through each time step, and through each item within the time step.

For a file with 3 items, it returns (item-number, time step index) in the following order:

(1,0), (2,0), (3,0), (1,1), (2,1), (3,1), (1,2),...

If data is explicitly read for item-time step (2,4), next item-time step will be (3,4).

If one of the methods reading/writing static item data is called, the dynamic item iterator is reset, and the following call to a ‘next’ function again returns the first item-time step. Similarly, whenever dynamic item data is read or written, the iterator for the static items is reset.
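The ordering and reset rules above can be checked with a small Python model of the ‘next’ iterator. `ItemTimestepCursor` and its methods are hypothetical, not part of the DFS API; items are numbered from 1 and time steps from 0, as in the example order above, and returning `None` past the last time step is a choice of this model.

```python
class ItemTimestepCursor:
    """Hypothetical model of the dynamic-item 'next' iterator (not the real API)."""

    def __init__(self, num_items, num_timesteps):
        self.num_items = num_items
        self.num_timesteps = num_timesteps
        self.reset()

    def reset(self):
        # Happens e.g. when static item data is read or written.
        self._item, self._step = 1, 0

    def _advance(self):
        # Cycle through all items of a time step before moving to the next time step.
        self._item += 1
        if self._item > self.num_items:
            self._item = 1
            self._step += 1

    def read_explicit(self, item, step):
        """Explicit read of (item, step); 'next' then continues from the following position."""
        self._item, self._step = item, step
        self._advance()
        return item, step

    def read_next(self):
        """Return the next (item number, time step index), or None past the last time step."""
        if self._step >= self.num_timesteps:
            return None
        current = (self._item, self._step)
        self._advance()
        return current
```

With three items, seven calls to `read_next` yield (1,0), (2,0), (3,0), (1,1), (2,1), (3,1), (1,2), and after `read_explicit(2, 4)` the next call yields (3,4).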

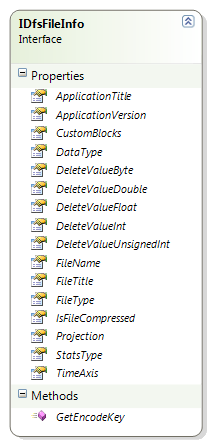

Header Information – IDfsFileInfo

The IDfsFileInfo interface provides access to the data in the header, excluding the data specifying the dynamic items. It is available from the IDfsFile interface (the FileInfo property) and also from some of the specialised DFS file interfaces, e.g. IDfs123File.

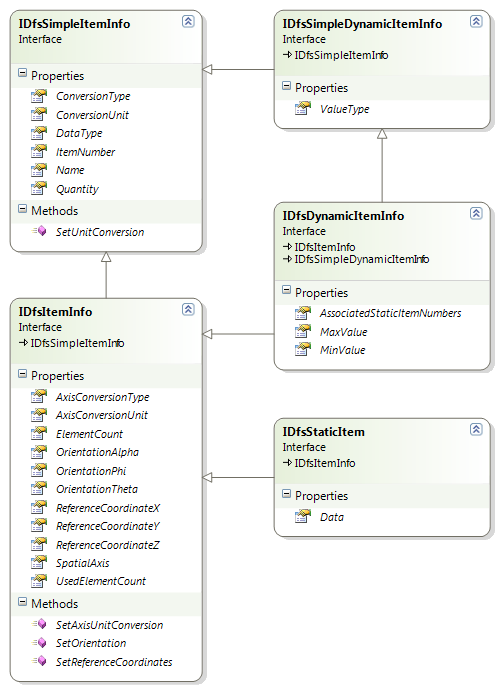

DFS Items

The item information exists in two versions: a full version and a simple version. The simple version hides the spatial information, i.e. the spatial axis, the reference coordinates and the orientation.

Most DFS file types do not allow individual items to have different spatial axes. Such files provide the axis definition at file level, not at item level. Hence the item information for such files is limited to the simple version, without any spatial information.

For the dynamic items, the versions are the full IDfsDynamicItemInfo interface and the simple IDfsSimpleDynamicItemInfo interface.

For the static items, currently only the full version of the interface exists, IDfsStaticItem.

Each DFS file type class includes a list of its dynamic items, either as a list of IDfsDynamicItemInfo or as a list of IDfsSimpleDynamicItemInfo.

Temporal Axis – IDfsTemporalAxis

The IDfsTemporalAxis interface is the base interface for all the specialised temporal axes: all the specialised temporal axes extend the IDfsTemporalAxis interface.

The IDfsTemporalAxis interface has a TimeAxisType property that defines which specialised temporal axis it actually is. No temporal axis implements only the IDfsTemporalAxis interface.

The specialised temporal axes are:

- IDfsEqCalendarAxis
- IDfsEqTimeAxis
- IDfsNonEqCalendarAxis
- IDfsNonEqTimeAxis

Based on the TimeAxisType the temporal axis object can be cast to its matching specialised temporal axis.
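The pattern of checking the type property first and then using the specialised axis can be sketched in Python. The sketch models only the two calendar axes, describing an equidistant axis by a start time and a fixed step, and a non-equidistant axis by a start time plus per-step offsets. These classes, fields and enum members are hypothetical and do not claim to match the members of the real IDfsEqCalendarAxis and IDfsNonEqCalendarAxis interfaces.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum


class AxisKind(Enum):
    """Hypothetical stand-in for the TimeAxisType values (only two modelled)."""
    EQ_CALENDAR = 1
    NON_EQ_CALENDAR = 2


@dataclass
class EqCalendarAxis:          # models the role of IDfsEqCalendarAxis
    start: datetime
    step_seconds: float
    kind: AxisKind = AxisKind.EQ_CALENDAR


@dataclass
class NonEqCalendarAxis:       # models the role of IDfsNonEqCalendarAxis
    start: datetime
    offsets_seconds: list      # offset of each time step from `start`
    kind: AxisKind = AxisKind.NON_EQ_CALENDAR


def time_of_step(axis, n):
    """Dispatch on the axis kind, then use the fields of the specialised axis."""
    if axis.kind is AxisKind.EQ_CALENDAR:
        return axis.start + timedelta(seconds=axis.step_seconds * n)
    if axis.kind is AxisKind.NON_EQ_CALENDAR:
        return axis.start + timedelta(seconds=axis.offsets_seconds[n])
    raise NotImplementedError(axis.kind)
```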

Spatial Axis – IDfsSpatialAxis

The IDfsSpatialAxis interface is the base interface for all specialised spatial axes: all the specialised spatial axes extend the IDfsSpatialAxis interface.

The IDfsSpatialAxis interface has an AxisType property that defines which specialised spatial axis it actually is. No spatial axis implements only the IDfsSpatialAxis interface.

The specialised spatial axes are:

- IDfsAxisEqD0
- IDfsAxisEqD1
- IDfsAxisEqD2
- IDfsAxisEqD3
- IDfsAxisEqD4
- IDfsAxisNeqD1
- IDfsAxisNeqD2
- IDfsAxisNeqD3
- IDfsAxisCurveLinearD2
- IDfsAxisCurveLinearD3

Based on the AxisType the spatial axis object can be cast to its matching specialised spatial axis.

DFS Parameters – IDfsParameters

The IDfsParameters contains a number of parameters that can be set when reading/editing DFS files. It is used as an argument to the DfsFileFactory methods.

- UbgConversion: When set, the unit conversion is set to UBG for all items and item axes. Default is false. See Item unit conversion for details.
- ModifyTimes: When set, the times are modified as described in Appendix A. Default is false.
- EnablePlugin: When set, the DFS plugin is enabled, which for some DFS file types produces derived dynamic items. For example, a file with the bathymetry in the static data and the water level in the dynamic data can produce the surface elevation as an additional derived dynamic item.

Furthermore, a set of data converters can be specified, as described in the following section.

Data converters – IDfsDataConverter

An IDfsDataConverter can convert an item and its data. A data converter can also change the item properties, such as the EUM quantity, the data type, etc. The following converters are provided with the .NET API:

- DfsConvertFloatToDouble: Converts item data from float to double precision numbers. It also updates the data type reported by the item to double.
- DfsConvertToUbg: Converts item data and the item spatial axis to UBG units. See Item unit conversion for details. Compared to setting the unit conversion to UBG units, using this data converter also changes the item EUM type and unit.
- DfsConvertToBaseUnit: Converts item data and the item spatial axis to EUM base units (almost always SI). It also updates the item and spatial axis EUM type and unit to base units.

The converters are designed to return item data that are still consistent, i.e. when converting data to another unit, also the item quantity is updated to that unit.

Example: assume you are developing a tool that performs some analysis of DFS data, based on SI units and double-precision data. By adding the two converters DfsConvertToBaseUnit and DfsConvertFloatToDouble to the DFS parameters, the tool need not care which unit or type the data is stored in; conversions are performed automatically when required.

Users can implement their own data converters by implementing the IDfsDataConverter interface. The converters described above are provided as part of the example code.
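The idea of chained converters that keep data and item properties consistent can be sketched in Python. `Item`, `FloatToDouble`, `ToBaseUnit` and `apply_converters` are hypothetical stand-ins for an item, the DfsConvertFloatToDouble and DfsConvertToBaseUnit converters, and the mechanism that applies them; the unit table is a made-up two-entry subset, not the EUM system. Python's `array` module stands in for single (`'f'`) and double (`'d'`) precision storage.

```python
from array import array
from dataclasses import dataclass


@dataclass
class Item:
    """Hypothetical item: name, unit and values stored as float ('f') or double ('d')."""
    name: str
    unit: str
    data: array


class FloatToDouble:
    """Models the role of DfsConvertFloatToDouble: widens the values and the data type."""

    def convert(self, item):
        if item.data.typecode == "f":
            return Item(item.name, item.unit, array("d", item.data.tolist()))
        return item


class ToBaseUnit:
    """Models the role of DfsConvertToBaseUnit with a made-up unit table."""

    FACTORS = {"mm": ("m", 0.001), "cm": ("m", 0.01)}  # hypothetical subset, not EUM

    def convert(self, item):
        if item.unit not in self.FACTORS:
            return item
        base_unit, factor = self.FACTORS[item.unit]
        values = [v * factor for v in item.data]
        # Data and unit change together, so the converted item stays consistent.
        return Item(item.name, base_unit, array(item.data.typecode, values))


def apply_converters(item, converters):
    """Apply each converter to the item in turn."""
    for converter in converters:
        item = converter.convert(item)
    return item
```

Placing `FloatToDouble` first widens the values before the unit factor is applied, so the scaled values are computed and stored in double precision.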

Creating New Generic DFS Files – DfsBuilder

The DfsBuilder class is used when creating new DFS files. The builder ensures that the DFS file is built and defined correctly, i.e. that all required data is set.

Only use the DfsBuilder class if none of the specialised DFS file builder classes provides the desired functionality.

The DfsBuilder class works together with the DfsFactory class: the DfsFactory class creates objects that can be used as arguments to the DfsBuilder methods, e.g. when specifying the temporal axis.

The builder works in two stages: in the first stage, all header information and information on the dynamic items is provided. In the second stage, static items are added.

In the first stage, the following header information must be provided:

- Geographic map projection
- Data type tag
- Temporal axis

Furthermore, all the dynamic items must be added and specified correctly. For that, a DfsDynamicItemBuilder is used. For each dynamic item you must specify:

- Name
- Quantity
- Spatial axis
- Type of data
- Value type

The remainder of the functionality in the builders is optional:

- File title
- Application title and version number
- Delete values
- Custom blocks

And for the dynamic items, the following optional parameters:

- Value type; default is Instantaneous
- Associated static items
- Reference coordinates and orientation

During the first stage, you can at any time call the Validate function, which returns a list of strings describing errors. If the list is empty, you are ready to enter stage 2.

When the header and the dynamic items are fully defined, i.e. no validation errors are left, the CreateFile function can be called, and the builder enters stage 2. In the second stage, any number of static items can be added. You can use the IDfsStaticItemBuilder to build a generic static item. With the static item builder, the following information is required:

- Name
- Quantity
- Spatial axis
- Data

There are also a number of convenience functions for adding ‘simpler’ static items by providing only a name and a dataset; dummy axis and quantity values are then used.

If/when all static items have been added, the GetFile method is called, which returns an IDfsFile. From that point the builder is no longer required (and is actually invalidated). Dynamic item data is added by using the methods in the IDfsFileIO interface.
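The two-stage workflow can be summarised with a Python model of the builder's state. `SketchDfsBuilder`, its methods and the field keys are hypothetical and only mirror the roles of Validate, CreateFile, the static item methods and GetFile described above; the real DfsBuilder is a .NET class with its own method signatures. The model checks the required header information and, for each dynamic item, name, quantity, spatial axis and type of data, treating value type as optional with the Instantaneous default.

```python
REQUIRED_HEADER = ("geographic_projection", "data_type_tag", "temporal_axis")
REQUIRED_ITEM_FIELDS = ("name", "quantity", "spatial_axis", "data_type")


class SketchDfsBuilder:
    """Hypothetical model of the two-stage DfsBuilder workflow (not the real API)."""

    def __init__(self):
        self._header = {}
        self._dynamic_items = []
        self._static_items = []
        self._stage = 1  # 1: header and dynamic items, 2: static items, 3: invalidated

    def _require_stage(self, stage):
        if self._stage != stage:
            raise RuntimeError(f"requires stage {stage}, builder is in stage {self._stage}")

    def set_header(self, key, value):
        self._require_stage(1)
        self._header[key] = value

    def add_dynamic_item(self, **fields):
        self._require_stage(1)
        # Value type is optional in this model and defaults to Instantaneous.
        fields.setdefault("value_type", "Instantaneous")
        self._dynamic_items.append(fields)

    def validate(self):
        """Return a list of error strings; an empty list means stage 2 can be entered."""
        errors = [f"header: missing {key}" for key in REQUIRED_HEADER if key not in self._header]
        for i, item in enumerate(self._dynamic_items, start=1):
            errors += [f"item {i}: missing {field}" for field in REQUIRED_ITEM_FIELDS if field not in item]
        return errors

    def create_file(self):
        self._require_stage(1)
        errors = self.validate()
        if errors:
            raise ValueError("; ".join(errors))
        self._stage = 2

    def add_static_item(self, name, data):
        self._require_stage(2)
        self._static_items.append((name, data))

    def get_file(self):
        """Finish building; the stage change makes any further building call raise."""
        self._require_stage(2)
        self._stage = 3
        return {
            "header": dict(self._header),
            "dynamic_items": list(self._dynamic_items),
            "static_items": list(self._static_items),
        }
```

Raising from `create_file` while errors remain mirrors the rule that stage 2 is entered only when validation reports no errors, and stage 3 models the invalidated builder.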